Confronting U.S. History

German philosopher Georg Hegel told us, “The only thing we learn from history is that we learn nothing from history.”

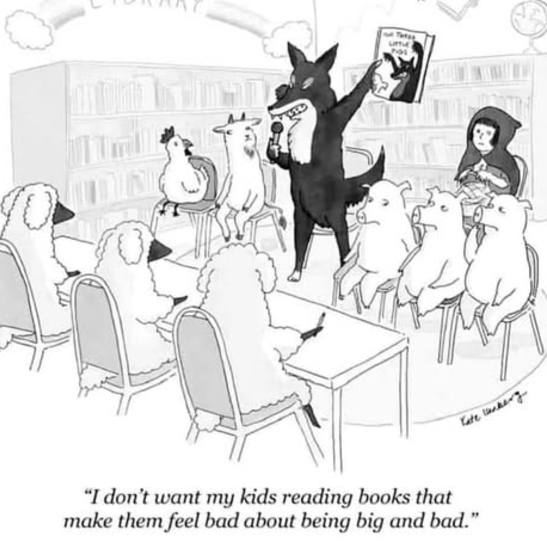

How could this turn out any differently when we deliberately lie about our past? What’s the matter with telling the truth?